Opinion

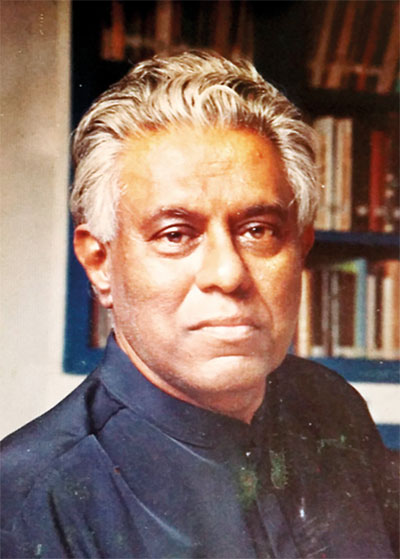

Emplacing Senake Bandaranayake’s archaeology in an intellectual tradition

by Prof Jagath Weerasinghe

Former Director of Postgraduate Institute of Archaeology, University of Kelaniya

(The following is the text of a speech delivered by Prof Jagath Weerasinghe at the seventh commemoration of the eminent archaeologist Professor Emeritus Senake Bandaranayake, held at the auditorium of the Faculty of Social Sciences of the University of Kelaniya on March 08.)

(Continued from yesterday)

Bandaranayake’s thinking in archaeology and art history

As an intellectual, Bandaranayake played many roles and appeared in many guises: archaeologist, art historian, academic administrator, institution builder, modern art writer or art critic, poet and social critic. In this talk I will attempt only to emplace his archaeology and art history within a school of thought, an intellectual tradition, a “gurukulaya”.

Like many of his generation, he did not study archaeology as an undergraduate, but only as a postgraduate; his academic training was in English Language, Anthropology, and History of Architecture. His PhD research on the viharas of Anuradhapura, published in his monumental book, “Sinhalese Monastic Architecture” of 1974, is quintessential archaeological research. An archaeologist must rely on the material record from the past for his explanations and interpretations and is required to acquire a high degree of acumen in seeing and reading the visual attributes that constitute material culture and its material and spatial setting, and to discern similarities, patterns, and specificities in order to formulate conceptual frameworks capable of incorporating both old and new material towards newer or adjusted interpretations. This is exactly what Bandaranayake achieved in this research; he is a master synthesizer of similarities and differences into cohesive frameworks, theoretical arguments, and explanatory models.

Sigiriya project

In his ‘Sigiriya Project: First Archaeological Excavation and Research Report’ of 1984, and the two publications that ensued from the Sigiriya-Dambulla Settlement Archaeology programme, published in 1990 and 1994, we come across a more mature form of Bandaranayake the archaeologist. Here is an archaeologist determined to exploit the full potential of the archaeological methods and theories that were current in the last decades of the 20th century to discover archaeological realities that can account for the life of the people on the margins of ancient kingdoms. In these three publications, especially the ones that came from the Sigiriya-Dambulla research programme, we see an archaeologist consciously constructing a research design that moves away from monuments that extol the royalty and the powerful of bygone days. As recorded in the Sigiriya Project report mentioned above, there is a marked change in his approach to and handling of the idea of archaeology. At Sigiriya, he considers archaeological sites as an integral part of a large environmental-ecological entity and sees this complex entity with its entire gamut of complex archaeological realities. He insists on taking Sigiriya in its wider archaeological context. He sees the importance of archaeologically deciphering its hinterland in making archaeological sense of Sigiriya itself. Said differently, he is moving away from a focus on monuments and towards a different kind of archaeological data in order to make archaeological sense of the monument itself.

Here, at the very outset of the report, he expresses his concerns and his main beef with Sri Lankan archaeology, saying, “One of the traditional preoccupations of Sri Lankan archaeology, the discovery of “museum pieces,” is merely incidental to the Sigiriya programme.” (SPR, p. 3). This is remarkable. Here, with this line, Bandaranayake appropriately declares the need to put an end to the antiquarian impulse, a scourge in Sri Lankan archaeology. This movement away from an archaeology enamored with “museum pieces” and monuments, an archaeology motivated by antiquarian impulses and urges, is carried further and emphatically stated in his introductory chapter of the “Approaches to the Settlement Archaeology of the Sigiriya-Dambulla” book. He says, “the present study is an exception in the “archaeology of the village” or “the archaeology of the “small people” …”. Instead of focusing on monuments, unique archaeological structures, or royal and official inscriptions, Bandaranayake directs his research team to look at the larger archaeological system that, in his view, constitutes the archaeological realities of Early and Middle Historical Period village life in the study region. He saw the research programme in the Sigiriya-Dambulla region as “the first attempt expressly directed at studying in some detail the archaeology of ancient village system in Sri Lanka.”

Team work

The achievements of the research project are several, and Bandaranayake underlines, in his own words, the “smooth formation of an effective and multi-functional field team” as one of the three achievements. The main value that ensured the success of this project, Bandaranayake indicates, was teamwork. Bandaranayake claims that “An archaeological project of this nature is only possible through teamwork.” It is necessary to dwell on this idea of teamwork in archaeology here. Teamwork in archaeology, especially in fieldwork and excavations, is not just the good politics of giving everyone an opportunity to participate in an archaeological excavation but a scientific requirement. Any archaeological excavation done by a single archaeologist with two or three students is not ‘scientific archaeology’; it is wrong and bad archaeology, because excavation displaces archaeological data, and if the excavation is done by a single archaeologist, its findings have not been processed through scientific verification protocols at the dig. Such single-archaeologist excavations yield no scientific data. Scientific archaeology can only happen in a discursive environment in the excavation pit.

Faith in archaeology

In doing archaeology, Bandaranayake was motivated by the belief that archaeology alone can provide us with “substantive and quantitative data regarding the nature, the complexity, and the patterning of rural settlements and settlement networks during the pre-modern period.” To actualize this faith in archaeology he looked not at monuments, single sites or museum pieces, but at a system of sites spread across a landscape. He also emphasized the need for teamwork and multifunctional field teams. In short, Bandaranayake looked at the archaeological past not as events but as a network of social relations that produced an archaeological landscape, and he saw doing archaeology as a discursive performance of many voices of many archaeologists and specialists. He was not interested in the cultural history of one site as an event from the past, as Christopher Tilley and Michael Shanks noted in 1987 of the archaeology of the 1970s in Europe and North America in general, but in ways of linking objects and their relationships to the social conditions of their creation in the past.

So, what is this archaeology that Bandaranayake promoted? What is this approach to archaeology? What are the precedents for this kind of approach to archaeology? This is an archaeology that rebuts signs of antiquarianism in an organised manner. This is an archaeology that demands a research design as a prerequisite for any fieldwork programme. This is an archaeology that necessitates a certain level of critical self-consciousness and self-reflexivity on the part of the archaeologist. What kind of intellectual tradition is he tapping for his mode/s of thought production in archaeology? What is he NOT looking at?

Intellectual cues

The history of archaeological thought, as written by Bruce Trigger, Tilley and Shanks, and many others, would show us that Bandaranayake had taken inspirational and intellectual cues from two schools of thought. One is Cambridge, and the other is the New Archaeology (of Lewis R. Binford) of North America. Bandaranayake’s archaeological thinking fits well with that of Binford and of David Clarke and Colin Renfrew, both of whom are from Cambridge University. Clarke, Renfrew, and Binford believed that archaeology could be a scientific and objective study of the past (a proposition that has been seriously challenged by many in the late 20th and 21st centuries). However, it must also be noted here that it was through Renfrew that Bandaranayake found links between Cambridge and New Archaeology. Renfrew probably provided Bandaranayake with the confidence to take archaeology as a strong scientific discipline, in the sense that “objective explanations” are not only discernible, but also a necessary commitment in archaeology. As such, Bandaranayake, like Renfrew, would opt to explain archaeological phenomena rather than interpret them. Bandaranayake did not venture into the interpretive archaeology that ensued from Cambridge in the late 1970s and 1980s. He remained faithful to scientific archaeology, so to speak.

What we see, then, is that Bandaranayake was attracted to a certain trend in global archaeology that had begun to take shape in the late 1960s and early 1970s. It seems necessary to trace this history, which changed the course of archaeology, by way of the important publications that challenged the lack of self-criticality in the discipline. This will help us to emplace Bandaranayake’s archaeology within a broad historical development that first swept through Britain and North America. There are four publications, which appeared between 1962 and 1973, that precipitated this change. In a decade, it seems, everything in archaeology changed forever. These publications also have a distant precursor in Walter Taylor’s 1948 publication, ‘A Study of Archaeology’. The four publications that concern us here begin with Lewis R. Binford’s famous 1962 article, “Archaeology as Anthropology,” which signaled the birth of ‘New Archaeology’ or Processual Archaeology in the USA. Then in 1968, David Clarke published his much-discussed book, Analytical Archaeology, in which he argued that archaeology is not history and archaeological data are not historical data. It is necessary to note that this claim was also made by Walter Taylor in 1948. Clarke advanced this claim by describing and defining the nature of archaeology. Renfrew’s 1972 publication, The Emergence of Civilization, emphasized the idea that the past is not just events; for archaeology, the past is the social relations that produced certain kinds of objects. And, finally, in 1973 Clarke published an article in the journal Antiquity, with an insightful title, “Archaeology: the loss of innocence”, where he argued for the necessity of research design for archaeological research.

Change

What we can notice, then, is that by the late 1960s and early 1970s, something radical was happening in archaeology. A new archaeology was struggling to be born and to claim its hegemonic position in the world of archaeology. This change demanded that archaeologists move away from the prevailing character of archaeology as “an undisciplined empirical discipline. A discipline lacking a scheme of systematic and ordered study based upon declared and clearly defined models and rules of procedure. It further lacks a body of central theory capable of synthesising the general regularities within its data in such a way that the unique residuals distinguishing each particular case might be quickly isolated and easily assessed.” This is how Clarke’s 1968 book opens. Clarke is attacking the antiquarian motivations in archaeology and the absence of theoretical discussions in archaeology. In the same paragraph, Clarke also condemns the habitual practice of making taxonomies based on undefined concepts, and he ends the paragraph by claiming, “Lacking an explicit theory defining these entities and their relationships and transformations in a viable form, archaeology has remained an intuitive skill – an inexplicit manipulative dexterity learned by rote.” From the very beginning, Bandaranayake’s archaeology refused to do this: to make simple taxonomies based on attributes, name them, and pass such naming off as explanations or interpretations.

Clarke also makes another important claim in this book, one that Bandaranayake adhered to in his archaeology and that some of his students seem to have intentionally forgotten. Clarke argued, “An archaeological culture is not a racial group, nor a historical tribe, nor a linguistic unit, it is simply an archaeological culture. Given great care, a large quantity of first-class archaeological data, precise definition and rigorous use of terms, and a good archaeological model, then we may with a margin of error be able to identify an archaeological entity in approximate social and historical terms. But this is the best we can do, and it is in any case only one of the aims of archaeological activity.” Bandaranayake never attempted to convert his archaeological data into historical data with simple historical rhetoric.

New Archaeology

If one doesn’t look closely enough, Bandaranayake’s relationship with the New Archaeology of North America is somewhat unclear. Bandaranayake seems not to have appreciated the hypothetico-deductive approach of New Archaeology. He relied on inductive reasoning; he was an empiricist. However, his hypothesis-building process needs closer examination before anything concrete can be proposed about it. He was, rather, attracted to the potential of well-examined common sense in archaeological explanations, and he also recognized the importance of explicit statements of how explanations are made from data.

To end this essay on Bandaranayake, I would summarise his archaeology as a project built on four convictions. He was a firm believer in the multidisciplinary nature of archaeological research. He emphasised the importance of teamwork in archaeology. He shunned the antiquarian impulse in archaeology, and he opted for explanation of the archaeological record rather than interpretation. These four convictions emplace his archaeology in the school of archaeological thought that emerged in the works of David Clarke and Colin Renfrew of Cambridge University. He also kept an eye on New Archaeology to a considerable extent. This brief essay does not do justice to the immense contribution that Bandaranayake made to Sri Lankan archaeology. But I believe that this essay will demonstrate to the reader the epistemological gravity of his contribution and its importance in establishing archaeology as a critical and scientific practice. Currently, in my opinion, Sri Lankan archaeology is caught in the doldrums, and the field is in need of a “Bandaranayake wind” to move the discipline further.

Opinion

Child food poverty: A prowling menace

by Dr B.J.C.Perera

MBBS(Cey), DCH(Cey), DCH(Eng), MD(Paed), MRCP(UK), FRCP(Edin),

FRCP(Lon), FRCPCH(UK), FSLCPaed, FCCP, Hony FRCPCH(UK), Hony. FCGP(SL)

Specialist Consultant Paediatrician and Honorary Senior Fellow,

Postgraduate Institute of Medicine, University of Colombo, Sri Lanka.

Joint Editor, Sri Lanka Journal of Child Health

In an age of unprecedented global development, technological advancements, universal connectivity, and improvements in living standards in many areas of the world, it is a very dark irony that child food poverty remains a pressing issue. UNICEF defines child food poverty as children’s inability to access and consume a nutritious and diverse diet in early childhood. Despite the planet Earth’s undisputed capacity to produce enough food to nourish everyone, millions of children still go hungry each day. We desperately need to explore the multifaceted deleterious effects of child food poverty on physical health, cognitive development, emotional well-being, and society, and then try to formulate a road map to alleviate them.

Every day, right across the world, millions of parents and families are struggling to provide nutritious and diverse foods that young children desperately need to reach their full potential. Growing inequities, conflict, and climate crises, combined with rising food prices, the overabundance of unhealthy foods, harmful food marketing strategies and poor child-feeding practices, are condemning millions of children to child food poverty.

In a communique dated 06th June 2024, UNICEF reports that globally, 1 in 4 children under the age of five, approximately 181 million, live in severe child food poverty, defined as consuming at most two of eight food groups in early childhood. These children are up to 50 per cent more likely to suffer from life-threatening malnutrition. Child Food Poverty: Nutrition Deprivation in Early Childhood, the third issue of UNICEF’s flagship Child Nutrition Report, highlights that millions of young children are unable to access and consume the nutritious and diverse diets that are essential for their growth and development in early childhood and beyond.

It is highlighted in the report that four out of five children experiencing severe child food poverty are fed only breastmilk or just some other milk and/or a starchy staple, such as maize, rice or wheat. Less than 10 per cent of these children are fed fruits and vegetables and less than 5 per cent are fed nutrient-dense foods such as eggs, fish, poultry, or meat. These are horrendous statistics that should pull at the heartstrings of the discerning populace of this world.

The report also identifies the drivers of child food poverty. Strikingly, though 46 per cent of all cases of severe child food poverty are among poor households where income poverty is likely to be a major driver, 54 per cent live in relatively wealthier households, among whom poor food environments and feeding practices are the main drivers of food poverty in early childhood.

One of the most immediate and visible effects of child food poverty is its detrimental impact on physical health. Malnutrition, which can result from both insufficient calorie intake and lack of essential nutrients, is a prevalent consequence. Chronic undernourishment during formative years leads to stunted growth, weakened immune systems, and increased susceptibility to infections and diseases. Children who do not receive adequate nutrition are more likely to suffer from conditions such as anaemia, rickets, and developmental delays.

Moreover, the lack of proper nutrition can have long-term health consequences. Malnourished children are at a higher risk of developing chronic illnesses such as heart disease, diabetes, and obesity later in life. The paradox of child food poverty is that it can lead to both undernutrition and overnutrition, with children in food-insecure households often consuming calorie-dense but nutrient-poor foods due to economic constraints. This dietary pattern increases the risk of obesity, creating a vicious cycle of poor health outcomes.

The impacts of child food poverty extend beyond physical health, severely affecting cognitive development and educational attainment. Adequate nutrition is crucial for brain development, particularly in the early years of life. Malnutrition can impair cognitive functions such as attention, memory, and problem-solving skills. Studies have consistently shown that malnourished children perform worse academically compared to their well-nourished peers. Inadequate nutrition during early childhood can lead to reduced school readiness and lower IQ scores. These children often struggle to concentrate in school, miss more days due to illness, and have lower overall academic performance. This educational disadvantage perpetuates the cycle of poverty, as lower educational attainment reduces future employment opportunities and earning potential.

The emotional and psychological effects of child food poverty are profound and are often overlooked. Food insecurity creates a constant state of stress and anxiety for both children and their families. The uncertainty of not knowing when or where the next meal will come from can lead to feelings of helplessness and despair. Children in food-insecure households are more likely to experience behavioural problems, including hyperactivity, aggression, and withdrawal. The stigma associated with poverty and hunger can further exacerbate these emotional challenges. Children who experience food poverty may feel shame and embarrassment, leading to social isolation and reduced self-esteem. This psychological toll can have lasting effects, contributing to mental health issues such as depression and anxiety in adolescence and adulthood.

Child food poverty also perpetuates cycles of poverty and inequality. Children who grow up in food-insecure households are more likely to remain in poverty as adults, continuing the intergenerational transmission of disadvantage. This cycle of poverty exacerbates social disparities, contributing to increased crime rates, reduced social cohesion, and greater reliance on social welfare programmes. The repercussions of child food poverty ripple through society, creating economic and social challenges that affect everyone. The healthcare costs associated with treating malnutrition-related illnesses and chronic diseases are substantial. Additionally, the educational deficits linked to child food poverty result in a less skilled workforce, which hampers economic growth and productivity.

Addressing child food poverty requires a multi-faceted approach that tackles both immediate needs and underlying causes. Policy interventions are crucial in ensuring that all children have access to adequate nutrition. This can include expanding social safety nets, such as food assistance programmes and school meal initiatives, as well as targeted manoeuvres to reach more vulnerable families. Ensuring that these programmes are adequately funded and effectively implemented is essential for their success.

In addition to direct food assistance, broader economic and social policies are needed to address the root causes of poverty. This includes efforts to increase household incomes through living wage policies, job training programs, and economic development initiatives. Supporting families with affordable childcare, healthcare, and housing can also alleviate some of the financial pressures that contribute to food insecurity.

Community-based initiatives play a vital role in combating child food poverty. Local food banks, community gardens, and nutrition education programmes can help provide immediate relief and promote long-term food security. Collaborative efforts between government, non-profits, and the private sector are necessary to create sustainable solutions.

Child food poverty is a profound and inescapable issue with far-reaching consequences. Its deleterious effects on physical health, cognitive development, emotional well-being, and societal stability underscore the urgent need for comprehensive action. As we strive for a more equitable and just world, addressing child food poverty must be a priority. By ensuring that all children have access to adequate nutrition, we can lay the foundation for a healthier, more prosperous future for individuals and society as a whole. The fight against child food poverty is not just a moral imperative but an investment in our collective future. Healthy, well-nourished children are more likely to grow into productive, contributing members of society. The benefits of addressing this issue extend beyond individual well-being, enhancing economic stability and social harmony. It is incumbent upon us all to recognize and act upon the understanding that every child deserves the right to adequate nutrition and the opportunity to thrive.

Despite all of these existent challenges, it is very definitely possible to end child food poverty. The world needs targeted interventions to transform food, health, and social protection systems, and also take steps to strengthen data systems to track progress in reducing child food poverty. All these manoeuvres must comprise a concerted effort towards making nutritious and diverse diets accessible and affordable to all. We need to call for child food poverty reduction to be recognized as a metric of success towards achieving global and national nutrition and development goals.

Material from UNICEF reports and AI assistance are acknowledged.

Opinion

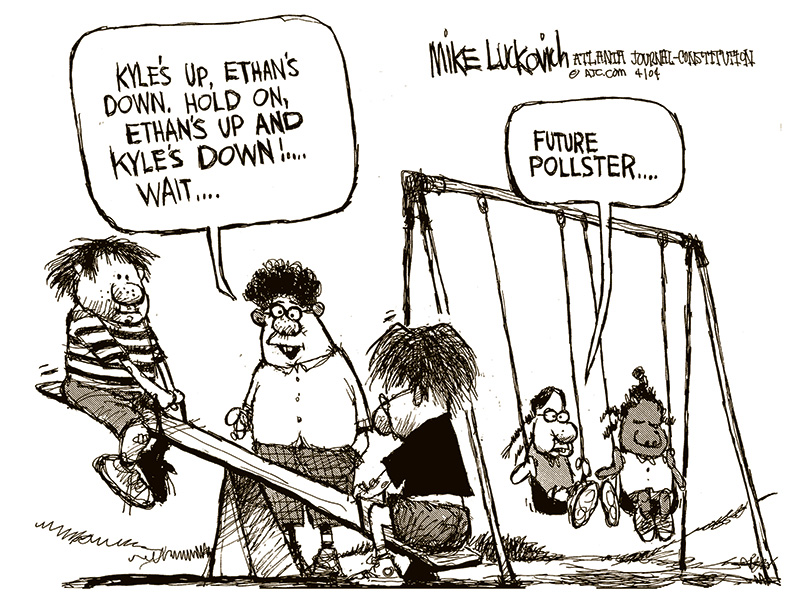

Do opinion polls matter?

By Dr Upul Wijayawardhana

The colossal failure of every opinion poll to predict accurately the result of the Indian parliamentary election, the greatest exercise in democracy in the world, raises the question whether the importance of opinion polls is vastly exaggerated. During elections two types of opinion polls are conducted. Polls based on voting intentions, published before or during the campaign, are often not very accurate as they are subject to many variables, whereas exit polls, which take a sample tally of how voters actually voted, are mostly accurate. However, of the 15 exit polls published soon after all the votes were cast in the massive Indian election, 13 vastly overpredicted the number of seats Modi’s BJP-led coalition, the NDA, would obtain, some giving a figure as high as 400, the number Modi claimed he was aiming for. The other two grossly underestimated the figure, predicting a hung parliament. The actual result is that the NDA passed the threshold of 272 comfortably, there being no landslide. The BJP by itself was not able to cross the threshold, a significant setback for an overconfident Modi! Whether this will result in fewer excesses on the part of Modi, like Muslim-bashing, remains to be seen. Anyway, the statement issued by the BJP that they would be investigating the reasons for the failure rather than blaming the process speaks very highly of the maturity of the democratic process in India.

I was intrigued by this failure of opinion polls as it differs dramatically from the record of polls in the UK. I never failed to watch ‘Election night specials’ on BBC; as Big Ben strikes ten (in the UK, polls close at 10pm), the anchor comes out with “Exit polls predict that …” and the actual outcome is often almost as predicted. However, many a time opinion polls conducted during the campaign have got the predictions wrong. There are many explanations for this.

An opinion poll is defined as a research survey of public opinion from a particular sample. Its origin can be traced back to the 1824 US presidential election, when two local newspapers in North Carolina and Delaware predicted the victory of Andrew Jackson, but the sample was local. The first national survey was done in 1916 by the magazine Literary Digest, partly to raise circulation, by mailing millions of postcards and counting the returns. Of course, this was not very scientific though it accurately predicted the election of Woodrow Wilson.

Since then, opinion polls have grown in extent and complexity, with scientific methodology improving the outcome of predictions not only in elections but also in market research. As a result, some of these organisations have become big businesses. For instance, YouGov, an internet-based organisation based in London and co-founded by the Iraqi-born British politician Nadhim Zahawi, had a revenue of 258 million GBP in 2023.

In Sri Lanka, opinion polls seem to be conducted by only one organisation which, by itself, is a disadvantage, as pooled data from surveys conducted by many are more likely to reflect the true situation. Irrespective of the degree of accuracy, politicians seem to be dependent on the available data which lend explanations to the behaviour of some.

The Institute for Health Policy’s (IHP) Sri Lanka Opinion Tracker Survey has been tracking the voting intentions for the likely candidates for the Presidential election. At one stage the NPP/JVP leader AKD was getting a figure over 50%. This, together with some degree of international acceptance, made the JVP behave as if they were already in power, leading to some incidents where their true colours were showing.

The comments made by a prominent member of the JVP, who claimed that the JVP killed only the riff-raff, raised many questions, in addition to being a total insult to the many innocents killed by them, including my uncle. Do they have the authority to do so? Do extra-judicial killings continue to be JVP policy? Do they consider anyone who disagrees with them riff-raff? Will they kill people simply because they do not comply, like one of my admired teachers, Dr Gladys Jayawardena, who was considered riff-raff because she, as the Chairman of the State Pharmaceutical Corporation, arranged to buy drugs cheaper from India? Is it not the height of hypocrisy that AKD is now boasting of his ties to India?

Another bigwig comes up with the grand idea of devolving law and order to village level. As stated very strongly in the editorial “Pledges and reality” (The Island, 20 May), is this what they intend to do: have JVP kangaroo courts?

Perhaps as a result of these incidents, AKD’s ratings have dropped to 39%, according to the IHP survey done in April, and Sajith Premadasa’s ratings have increased gradually to match that. Whilst they are level pegging, Ranil is far behind at 13%. Is this the reason why Ranil is getting his acolytes to propagate the idea that the best course for the country is to extend his tenure by a referendum? He forced the postponement of Local Government elections by refusing to release funds, but he cannot do so for the presidential election for constitutional reasons. He is now looking for loopholes. Has he considered the distinct possibility that a referendum to extend the life of the presidency and the parliament, if lost, would double the expenditure?

Unfortunately, this has been an exercise in futility and it would not be surprising if the next survey shows Ranil’s chances dropping even further! Perhaps, the best option available to Ranil is to retire gracefully, taking credit for steadying the economy and saving the country from an anarchic invasion of the parliament, rather than to leave politics in disgrace by coming third in the presidential election. Unless, of course, he is convinced that opinion polls do not matter and what matters is the ballots in the box!

Opinion

Thoughtfulness or mindfulness?

By Prof. Kirthi Tennakone

ktenna@yahoo.co.uk

Thoughtfulness is the quality of being conscious of issues that arise and considering action while seeking explanations. It facilitates finding solutions to problems and judging experiences.

Almost all human accomplishments are consequences of thoughtfulness.

Can you perform day-to-day work efficiently and effectively without being thoughtful? Obviously, no. Are there any major advancements attained without thought and contemplation? Not a single example!

Science and technology, art, music and literary compositions and religion stand conspicuously as products of thought.

Thought could have sinister motives and the only way to eliminate them is through thought itself. Thought could distinguish right from wrong.

Empathy, love, amusement, and expression of sorrow are reflections of thought.

Thought relieves worries by understanding or taking decisive action.

Despite the universal virtue of thoughtfulness, some advocate an idea termed mindfulness, claiming that nurturing this quality benefits mental wellbeing. The concept is defined as focusing attention on the present moment without judgment: a way of forgetting worries and calming the mind, a form of meditation. It is a definition coined in the West to decouple the concept from religion. The attitude could have a temporary advantage as a method of softening negative feelings such as sorrow and anger. However, no man or woman can afford to be non-judgmental all the time. It is incompatible with indispensable thoughtfulness! What is the advantage of diverting attention to one thing without discernment during a few tens of minutes of meditation? The instructors of mindfulness meditation tell you to focus attention on trivial things, whereas in thoughtfulness you concentrate the mind on challenging issues, sometimes arriving at groundbreaking scientific discoveries, solutions to mathematical problems or the creation of masterpieces in engineering, art, or literature.

The concept of meditation and mindfulness originated in ancient India around 1000 BCE. Vedic ascetics believed the practice would lead to supernatural powers enabling disclosure of the truth. That the practice failed to meet this aspiration, notwithstanding the many stories in scripture, is evident. Otherwise, the world would have been awakened to advancement by ancient Indians before the Greeks. The latter culture emphasized thoughtfulness!

In India, Buddha was the first to deviate from the Vedic philosophy. His teachers, Alara Kalama and Uddaka Ramaputra, were adherents of meditation. Unconvinced by their approach, Buddha concluded that a thoughtful analysis of the actualities of life should be the path to realisation. However, in an environment dominated by Vedic tradition, meditation persisted as a residue when Buddha’s teachings were transformed into a religion.

In the early 1970s, a few in the West picked up meditation and mindfulness. We Easterners, who criticize Western ideas all the time, were elated to see something Eastern accepted in Western circles. Thereafter, Easterners took up the subject more seriously, in the spirit of its definition in the West.

Today, mindfulness has become a marketable commodity – a thriving business spreading worldwide, fueled largely by advertising. There are practice centres, lessons onsite and online, and apps for purchase. Articles written by gurus of the field appear on the web.

What attracts people to mindfulness programmes? Many assume that, being stressed and depressed, they need to improve their mental capacity. In most instances, these are minor complaints and, for understandable reasons, people do not seek mainstream medical interventions but go for exaggeratedly advertised alternatives. Mainstream medical treatments are based on rigorous science and spell out both the pros and cons of the procedure, avoiding overstatement, whereas the alternative sector makes unsubstantiated claims about the efficacy and effectiveness of the treatment.

Advocates of mindfulness claim the benefits of their prescriptions have been proven scientifically. There are reports (mostly in open-access journals which charge a fee for publication) indicating that authors have found positive aspects of mindfulness or identified reasons that would explain the efficacy of such activities. However, they rarely meet the standards normally required for unequivocal acceptance. The gold standard of scientific scrutiny is the statistically significant reproducibility of claims.

If a mindfulness guru claims his prescription of meditation cures hypertension, he must record the blood pressure of participants before and after completion of the activity, show that the blood pressure of a large percentage has stably dropped, and repeat the experiment with different clients. He must also conduct sessions where he adopts another prescription (a placebo) under the same conditions and compare the results. Even this is not enough: he must request someone else to conduct sessions following his prescription, to rule out the influence of the personality of the instructor.
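The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a clinical protocol: the function names are hypothetical, the readings in the usage note are invented placeholders rather than results from any study, and a simple permutation test stands in for whatever statistical method a real trial would specify in advance.

```python
import random
from statistics import mean


def mean_drop(before, after):
    """Average fall in blood pressure (before minus after) across participants."""
    return mean(b - a for b, a in zip(before, after))


def permutation_p_value(drops_a, drops_b, trials=10_000, seed=0):
    """One-sided permutation test (an assumed choice of test).

    Returns the fraction of random relabellings of the pooled participants
    whose difference in mean drop is at least as large as the observed
    difference between group A (meditation) and group B (placebo).
    """
    rng = random.Random(seed)
    observed = mean(drops_a) - mean(drops_b)
    pooled = list(drops_a) + list(drops_b)
    n = len(drops_a)
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        if mean(pooled[:n]) - mean(pooled[n:]) >= observed:
            hits += 1
    return hits / trials
```

With invented per-participant drops such as `meditation = [10, 11, 12, 13, 14, 15]` and `placebo = [0, 1, 2, 3, 4, 5]`, calling `permutation_p_value(meditation, placebo)` yields a small value, whereas two groups with similar drops yield a large one. The whole exercise would then have to be repeated with different participants and a different instructor before the claim could be called reproducible.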

The laity, unaware of these rigorous requirements, accede to the claims of mindfulness proponents.

A few years ago, a widely cited article stated that the practice of mindfulness increases the gray matter density of the brain. A more recent study found no such correlation. Popular expositions on the subject do not refer to the latter report. Most published mindfulness research seems to have been conducted with the intention of proving the benefits of the practice. Hard science demands doing the opposite as well: experiments carried out with the intention of disproving the claims. You need to be skeptical until things are firmly established.

Despite many efforts directed at disproving Einstein’s General Theory of Relativity, no contradictions have been found to date, which strengthens the validity of the theory. Regarding mindfulness, as it stands, the benefits can be neither proved nor disproved to the gold standard of scientific scrutiny.

Some schools in foreign lands have accommodated mindfulness training programs, hoping to develop the mental faculties of students, and Sri Lanka plans to follow. However, studies also reveal that these exercises are ineffective or do more harm than good. Have we investigated this issue before imitating them?

Should we force our children to focus attention on one single goal without judgment, even for a moment?

Why not allow young minds to roam wild in their deepest imagination and build castles in the air, and encourage them to turn these fantasies into realities by nurturing their thoughtfulness?

Be more thoughtful than mindful?